How Can Organizations Leverage Their Data to Use It with ChatGPT?

ChatGPT possesses a remarkable ability to create text utilizing the knowledge from its training dataset. However, since it was trained on publicly available information only, it may not be able to provide valuable responses when asked about private matters, such as those related to your organization.

Organizations generate an enormous amount of data that can inform decisions, but handling it is challenging, and they need efficient ways to extract insights from it. One such way is to use artificial intelligence (AI) models like ChatGPT. To do so effectively, organizations must make their data available to the model, which can be done with techniques such as fine-tuning and embeddings.

ChatGPT is a large language model (LLM) that generates text by using probability to determine the next word in a sentence. To make use of organizational data, we can either fine-tune the model or use embeddings to find relevant information and supply it to the model. The latter is the more interesting approach.

The power of embedding

An embedding is a mathematical representation of text: more precisely, a vector of numbers (the same kind of vector you learned about in math lessons). Words with similar meanings have similar numerical representations, so they appear close together when plotted on a graph. The visualization below gives a better overview:

Source: TensorFlow

The picture displays a graphical representation of words in which certain groups are distinguishable, such as the word "drummer" being linked to music-related subjects. Additionally, there is a group of names located at the bottom and a collection of professions depicted on the left-hand side. This showcases the effectiveness of embeddings, and it is a tool we can use today.

We can capture organizational knowledge in embeddings by converting words and sentences into vectors. Take our mobile app development offering as an example. As you can see, there is a wealth of information available on our mobile application development process. We can convert it into a mathematical representation and do the same with our query.

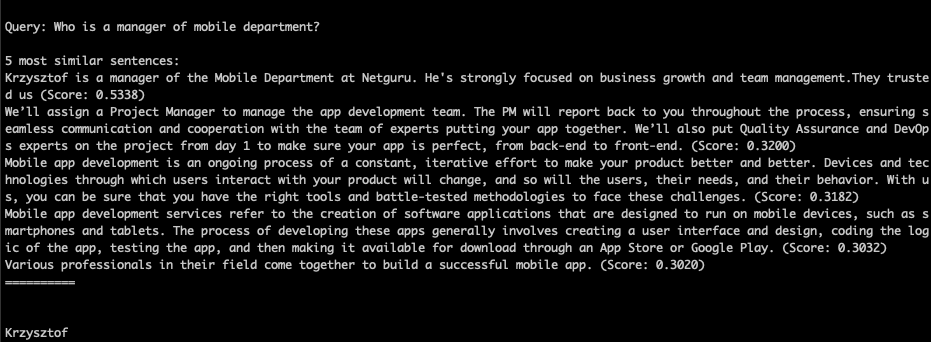
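To make the idea of "converting text into vectors" concrete, here is a minimal sketch. It is not how a production embedding model works: as a stand-in, it assumes a toy word-count vector, whereas a real system would call a learned embedding model. The function names (`tokenize`, `build_vocabulary`, `embed`) are hypothetical and exist only for this illustration.

```python
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())

def build_vocabulary(texts: list[str]) -> list[str]:
    """Collect every distinct token, sorted so vector positions are stable."""
    return sorted({token for text in texts for token in tokenize(text)})

def embed(text: str, vocabulary: list[str]) -> list[int]:
    """Toy embedding (assumption): count how often each vocabulary word occurs."""
    counts = Counter(tokenize(text))
    return [counts[word] for word in vocabulary]

# Two sentences shortened from the offering page, plus the query.
sentences = [
    "Krzysztof is the manager of the Mobile Department at Netguru.",
    "We'll assign a project manager to manage the app development team.",
]
query = "Who is the manager of the mobile department?"

vocabulary = build_vocabulary(sentences + [query])
sentence_vectors = [embed(s, vocabulary) for s in sentences]
query_vector = embed(query, vocabulary)

# Every text, including the query, now lives in the same vector space.
print(len(vocabulary), query_vector)
```

The important property is that the documents and the query are converted with the same procedure, so their vectors have the same dimensions and can be compared directly.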

Suppose we start with the question "Who is the manager of the mobile department?". We now have vector representations of both the text and the query. By using cosine similarity, we can identify the text vectors that are most similar to the query.

The top-ranked sentence is precisely the information we are seeking: Krzysztof is indeed the manager. The algorithm also retrieved some other details about project managers and processes, but with significantly lower scores. We can easily modify the algorithm to keep only high-scoring results by default.
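The similarity check and the score cut-off can be sketched in a few lines. The vectors below are hypothetical three-dimensional values chosen by hand, and `top_matches` with its `k` and `min_score` parameters is an illustrative helper, not part of any library. Real embeddings typically have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_matches(query_vec, sentence_vecs, k=5, min_score=0.0):
    """Return up to k (index, score) pairs scoring at least min_score, best first."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(sentence_vecs)]
    kept = [(i, score) for i, score in scored if score >= min_score]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)[:k]

# Hypothetical vectors, for illustration only.
query_vec = [1.0, 0.0, 1.0]
sentence_vecs = [
    [1.0, 0.0, 1.0],  # same direction as the query
    [1.0, 1.0, 0.0],  # partly overlapping
    [0.0, 1.0, 0.0],  # orthogonal to the query
]

matches = top_matches(query_vec, sentence_vecs, k=5, min_score=0.4)
for index, score in matches:
    print(index, round(score, 4))
```

Raising `min_score` is the "only consider high scores" adjustment: sentences below the threshold never reach the language model.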

Now we can leverage a large language model, e.g., ChatGPT, GPT-3, GPT-4, or any other. We create a prompt and put our findings in it. The easiest option is to use an instruction-tuned model, so we can tell it what to do.

“You are an LLM. Your job is to answer the query based only on the most similar embeddings. Along with them, you will get a cosine-similarity score between generated sentences and the query. Answer based on provided input only.”

And let's pass our input:

Query example: “Who is the manager of the mobile department?”

The five most similar sentences:

“Krzysztof is the manager of the Mobile Department at Netguru. He's strongly focused on business growth and team management. They trusted us (Score: 0.5338)

We’ll assign a project manager to manage the app development team. The PM will report back to you throughout the process, ensuring seamless communication and cooperation with the team of experts putting your app together. We’ll also put quality assurance and DevOps experts on the project from day one to make sure your app is perfect, from back-end to front-end. (Score: 0.3200)

Mobile app development is an ongoing process of a constant, iterative effort to make your product better and better. Devices and technologies through which users interact with your product will change, and so will the users, their needs, and their behavior. With us, you can be sure that you have the right tools and battle-tested methodologies to face these challenges. (Score: 0.3182)

Mobile app development services refer to the creation of software applications that are designed to run on mobile devices, such as smartphones and tablets. The process of developing these apps generally involves creating a user interface and design, coding the logic of the app, testing the app, and then making it available for download through the App Store or Google Play. (Score: 0.3032)

Various professionals in their field come together to build a successful mobile app. (Score: 0.3020)”

Result: The manager of the Mobile Department at Netguru is Krzysztof.
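Assembling such a prompt is plain string formatting. The sketch below reuses the instruction text and two of the scored sentences from the example above (the first one shortened). The `build_prompt` helper and the exact layout of the prompt are assumptions made for illustration, and the call to the language model itself is left out.

```python
INSTRUCTION = (
    "You are an LLM. Your job is to answer the query based only on the most "
    "similar embeddings. Along with them, you will get a cosine-similarity "
    "score between generated sentences and the query. Answer based on "
    "provided input only."
)

def build_prompt(query: str, matches: list[tuple[str, float]]) -> str:
    """Combine the instruction, the query, and the scored sentences into one prompt."""
    lines = [INSTRUCTION, "", f"Query: {query}", "", "Most similar sentences:"]
    for sentence, score in matches:
        lines.append(f"- {sentence} (Score: {score:.4f})")
    return "\n".join(lines)

matches = [
    ("Krzysztof is the manager of the Mobile Department at Netguru.", 0.5338),
    ("Various professionals in their field come together to build a successful mobile app.", 0.3020),
]

prompt = build_prompt("Who is the manager of the mobile department?", matches)
print(prompt)  # This string is what would be sent to the language model.
```

Keeping the scores in the prompt lets the model weigh a 0.53 match more heavily than a 0.30 one, as the instruction asks.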

Query example: “Can you make applications for free?”

This is a more complicated query: “Can you make an application for free?”

Language models have a reputation for being unreliable, but can this approach reduce that risk? Let's ask about making an application for free. First things first: embeddings and a similarity check.

The retrieved sentences contain no information about free development, but they do mention a free consulting session with our team. Let's prompt ChatGPT in the same way as before.

Based on the provided input, there is no sentence that directly answers the query. However, there is a sentence that mentions a "free consulting session" with a mobile app developer. It is possible that during this session, the possibility of getting a free application may be discussed.

In my view, the result is exactly what it should be. Based on the text, you can get a consulting session, and the model reported just that.

Large language models on local machines only

What about processing private data that must stay on your own device? Is there a viable approach for this? Numerous smaller language models exist, but their primary limitation is that they cannot produce satisfactory results on their own. Could adding embeddings on top of these models improve their performance?

We used a much smaller model – DistilBERT.

This model is extremely fast and compact, and it evidently returns sufficient detail. The response is not as elaborate as before: it presents only the precise information relevant to your query rather than a polished write-up.

Even with more complex queries, the tool still produces satisfactory outcomes. And all of this happens locally on our machine, without requiring an internet connection.

DistilBERT vs GPT-3

DistilBERT is a smaller and faster version of the popular BERT (bidirectional encoder representations from transformers) model. It was developed by Hugging Face, a popular NLP (natural language processing) company. Compared to readily available models like GPT-3, DistilBERT boasts several advantages:

- Size – DistilBERT's size is significantly smaller than that of GPT-3. While GPT-3 contains 175 billion parameters, DistilBERT has only 66 million. This feature makes DistilBERT a more convenient option for utilization and implementation, particularly on devices with constrained resources.

- Speed – GPT-3 requires a substantial amount of computational power to produce an answer, whereas DistilBERT processes text significantly faster. As a result, DistilBERT is more suitable for real-time applications.

- Cost – DistilBERT is a more cost-effective alternative to GPT-3, which requires an abundance of computational resources that can prove to be costly. In contrast, DistilBERT can be executed on a single GPU, which is a much more economical option.

- Training Data – DistilBERT's training dataset is considerably smaller than that of GPT-3, which was trained on an enormous, largely web-scraped dataset containing a substantial amount of noise and unrelated data. DistilBERT was pre-trained on the same, more curated corpus as BERT (English Wikipedia and a corpus of books).

- Fine-tuning – DistilBERT can undergo fine-tuning, the process of adjusting a pre-existing model to cater to a specific task, at a considerably faster rate than GPT-3. DistilBERT can be fine-tuned within a few hours, whereas GPT-3 can take several days, if not weeks.
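The size difference is easier to appreciate as a back-of-the-envelope calculation. The sketch below takes the parameter counts quoted above and assumes each parameter is stored as a 32-bit float (4 bytes). It counts only the weights, ignoring activations and other runtime memory, so treat the figures as rough lower bounds. The `weights_size_gb` helper is hypothetical.

```python
def weights_size_gb(parameter_count: int, bytes_per_parameter: int = 4) -> float:
    """Approximate size of the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return parameter_count * bytes_per_parameter / 10**9

distilbert_gb = weights_size_gb(66_000_000)        # 66 million parameters
gpt3_gb = weights_size_gb(175_000_000_000)         # 175 billion parameters

print(f"DistilBERT: ~{distilbert_gb:.2f} GB, GPT-3: ~{gpt3_gb:.0f} GB")
```

Under these assumptions, DistilBERT's weights fit comfortably in the memory of an ordinary laptop, while GPT-3's weights alone would need hundreds of gigabytes.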

To achieve even better results, we can fine-tune this model for our use case. Fine-tuning means adapting a pre-trained model to a specific problem domain by adjusting its weights and biases to improve performance on the target task.

The fine-tuning approach is frequently employed in transfer learning, whereby a pre-existing model is utilized as the basis to tackle a novel problem. To achieve optimal performance, the fine-tuning process demands meticulous examination of the data, model architecture, and training parameters.

Benefits of the GPT tools family for businesses

One of the main advantages of GPT is that it can help businesses save time and resources by automating processes:

- Improved email writing – As an AI language model, ChatGPT can generate professional and compelling business emails, drawing on the wide range of writing styles and structures it learned during training.

- Article and post generation – Advancements in AI have led to the creation of written texts that are so sophisticated and eloquent that it can be difficult to discern whether they were authored by a machine or a human being. However, factual information still needs to be double checked by a content specialist.

- Enhanced search experience – Your organization can enhance the search experience by utilizing GPT models with your own data, enabling natural language queries. This will allow your clients or employees to engage in chat-based conversations to obtain the desired information.

- Improved SEO outcomes – How easy is it to find information on your website? Embeddings let you check how well your content matches the queries people ask, while GPT helps confirm that even machines can understand the content of your webpage.

- Revised procedures – Instead of searching for information themselves, users can ask the computer to find it for them.

- Coding – GPT can generate the code for a mobile app, or even a simple product, in a couple of minutes. However, there are code size limitations: for now, GPT can provide only a small amount of code at a time.

Can you trust GPT?

In most cases, yes. GPT is a language model that employs deep learning to produce text that resembles human writing. It has been extensively trained on a vast collection of textual information and can generate content that is coherent and appropriate when given a prompt or question.

It's important to remember that GPT can be biased or predict wrong answers because of the dataset it was trained on.

To use GPT effectively, it is crucial to consider both the available information and the training dataset. The type of data used greatly affects the output the model generates. For example, if we were to train GPT on Wikipedia, the model would learn from a vast amount of encyclopedic content, with potentially complex sentences that may be difficult to comprehend.

However, if we were to use Twitter data, the output generated by the model would likely be short and biased towards people's opinions. It is important to carefully consider the training dataset for GPT, as it greatly impacts the quality and type of responses that can be generated.

It is therefore crucial to exercise caution when using GPT and to verify the accuracy and relevance of its output. Care must also be taken to incorporate diverse and unbiased training data, as well as to construct prompts and queries thoughtfully to avoid bias or false information. Prudence helps avoid unfounded conclusions and maintains the reliability of the information presented.

Summary

ChatGPT is a large language model that generates text based on its training dataset. However, if one wants to ask about private or organization-specific information, injecting data into the model is necessary.

Embeddings, which are mathematical representations of text, can be used to leverage organizational knowledge. Once an organization's information and a query are converted into vectors, a cosine-similarity score identifies the vectors most similar to the query. The most similar sentences are then used to answer the query.

The GPT family can be used to enhance the search experience by enabling natural language queries, improving SEO outcomes, and allowing users to ask the computer to find information for them. However, it is important to use GPT with caution and to verify the accuracy and relevance of the text it generates. The dataset used to train the model must be diverse and unbiased, while prompts and queries must be carefully crafted to avoid bias or misleading information.