How to Integrate AI Into Legacy Systems: A Practical Guide


Did you know that businesses integrating AI into legacy systems have seen productivity gains of up to 18%? That's not all: according to Accenture, companies implementing strategic AI use cases are nearly three times more likely to exceed their ROI expectations.

Modernizing outdated infrastructure poses significant challenges for many organizations today. Legacy system transformation requires more than adding new technology. It demands a thoughtful connection of modern AI capabilities with existing architecture. Gartner's forecast reveals an interesting trend: by 2027, organizations will adopt small, task-specific AI models at a rate at least three times higher than general-purpose large language models. This points to a shift toward practical, focused AI integration approaches.

The rewards of proper implementation are impressive. One major offshore oil and gas operator deployed predictive maintenance AI across nine platforms, reducing downtime by 20% and increasing annual oil production by over 500,000 barrels. Corewell Health achieved similar success with its AI platform for clinical documentation, which allowed 90% of clinicians to give patients more focused attention while reducing cognitive load by 61%.

This guide offers a practical, step-by-step approach to successfully integrating AI into your existing systems, without requiring a complete overhaul. You'll discover how to evaluate your current infrastructure, prepare your data, implement modular solutions, and scale AI capabilities that deliver measurable business results. Let's look at how you can transform your legacy systems with AI that works.

Evaluate Your Legacy System Before You Begin

Before jumping into AI integration, you need to thoroughly assess your legacy system. Understanding what you're working with determines both the feasibility of introducing AI capabilities into your existing architecture and the approach you should take.

Identify system limitations and bottlenecks

Start by evaluating your current infrastructure to understand its architecture and constraints. According to Forrester and MongoDB research, 60% of CTOs describe their legacy tech stack as too costly and inadequate for modern applications. When examining your systems, watch for these common limitations:

- Outdated technology that lacks scalability for AI workloads

- Performance bottlenecks that slow down operations

- Security vulnerabilities that could be exploited

- Integration difficulties with modern cloud services

The "6 C's" framework helps prioritize your modernization efforts: cost, compliance, complexity, connectivity, competitiveness, and customer satisfaction. This assessment reveals which components need immediate attention before AI implementation.
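To make the assessment repeatable, you can score each legacy component against the six dimensions and rank the results. The sketch below is a hypothetical weighted-scoring approach, not a standard implementation of the framework; the 1 to 5 pain scale, the weights, and the component names are illustrative assumptions.

```python
from dataclasses import dataclass, field

# The six dimensions of the "6 C's" framework.
SIX_CS = ("cost", "compliance", "complexity", "connectivity",
          "competitiveness", "customer_satisfaction")


@dataclass
class Component:
    name: str
    # Assumed scale: 1 = no problem, 5 = severe problem on that dimension.
    scores: dict = field(default_factory=dict)


def priority(component: Component, weights: dict) -> float:
    """Weighted average pain score; higher means modernize sooner."""
    total_weight = sum(weights[c] for c in SIX_CS)
    # Dimensions that were not scored default to 1 (no problem).
    weighted = sum(component.scores.get(c, 1) * weights[c] for c in SIX_CS)
    return weighted / total_weight


def rank(components: list, weights: dict) -> list:
    """Order components from most to least urgent."""
    return sorted(components, key=lambda comp: priority(comp, weights), reverse=True)


# Hypothetical example: compliance weighted twice as heavily as the rest.
weights = {c: 1.0 for c in SIX_CS}
weights["compliance"] = 2.0
billing = Component("billing", {c: 4 for c in SIX_CS})
reporting = Component("reporting", {"cost": 2, "connectivity": 3})
for comp in rank([reporting, billing], weights):
    print(comp.name, round(priority(comp, weights), 2))
```

The weights are where business judgment enters: a heavily regulated organization might weight compliance far above competitiveness, which changes the ranking without changing the scores.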

Assess data quality and accessibility

AI effectiveness depends heavily on data quality. Legacy systems often store information in outdated formats or siloed databases, which creates significant challenges. For reliable AI results, focus on:

- Data cleaning and standardization, to keep records accurate and consistently formatted. This may involve de-duplication, error correction, and format standardization.

- Data integration, to connect the various sources within legacy systems into a unified data environment.

Poor data quality introduces bias and inaccuracies, leading to flawed outcomes in critical operations.
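Before committing to a cleanup plan, it helps to measure how bad the data actually is. This minimal Python sketch profiles a sample extract for duplicate keys and missing values; the field names and the choice of checks are assumptions for illustration, and a fuller profile would also cover formats, value ranges, and cross-source consistency.

```python
from collections import Counter


def profile(records: list, key_field: str, required_fields: list) -> dict:
    """Report duplicate keys and missing-value rates for a sample of records."""
    total = len(records)
    key_counts = Counter(r.get(key_field) for r in records)
    # Every occurrence of a key beyond the first counts as a duplicate.
    duplicate_keys = sum(n - 1 for n in key_counts.values() if n > 1)
    missing_rate = {}
    for f in required_fields:
        missing = sum(1 for r in records if r.get(f) in (None, ""))
        missing_rate[f] = missing / total if total else 0.0
    return {"total": total, "duplicate_keys": duplicate_keys, "missing_rate": missing_rate}


# Hypothetical extract from a legacy CRM table.
sample = [
    {"customer_id": "A1", "email": "a@example.com", "postcode": "10115"},
    {"customer_id": "A1", "email": "", "postcode": "10115"},
    {"customer_id": "B2", "email": None, "postcode": ""},
]
print(profile(sample, "customer_id", ["email", "postcode"]))
```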

Map AI opportunities to business goals

Rather than implementing AI for its own sake, identify specific use cases aligned with business objectives. Organizations where AI teams help define success metrics are 50% more likely to use AI strategically.

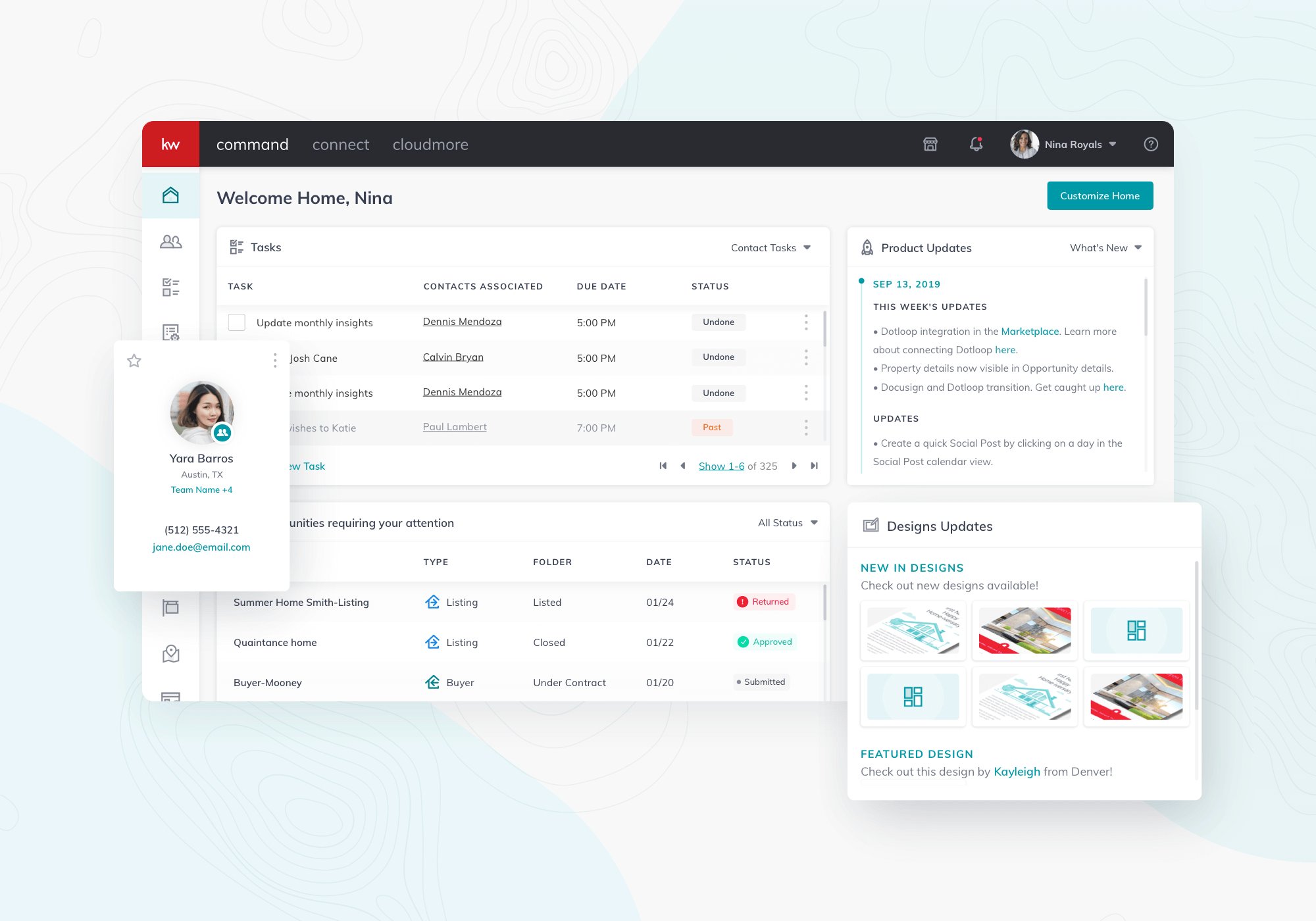

Keller Williams exemplifies this approach by connecting all their systems, including legacy infrastructure, to power AI applications like Command (their AI-powered CRM) and Kelle (an AI personal assistant). This integration was fundamental to their digital transformation strategy.

NewGlobe demonstrated a focused approach by integrating GenAI into their content creation process, connecting AI systems to teacher guide templates and content spreadsheets. Meanwhile, Newzip used AI for hyper-personalization, developing a proof of concept that added deeper personalization capabilities to their existing platform.

What's the best way to prioritize these opportunities? Evaluate use cases based on value generation versus implementation feasibility. Start with low-risk, high-reward AI projects to build confidence and demonstrate value before tackling more complex initiatives.
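A simple value-versus-feasibility grid is one way to apply this. The sketch assumes each use case has already been scored from 1 to 5 on both axes by the team; the threshold, the quadrant labels, and the example use cases are hypothetical.

```python
def quadrant(value: int, feasibility: int, threshold: int = 3) -> str:
    """Place a use case, scored 1 to 5 on each axis, in a 2x2 grid."""
    high_value = value >= threshold
    feasible = feasibility >= threshold
    if high_value and feasible:
        return "quick win"
    if high_value:
        return "strategic, plan for a later phase"
    if feasible:
        return "low priority"
    return "defer"


# Hypothetical candidate use cases: name -> (value, feasibility).
candidates = {
    "invoice classification": (4, 5),
    "end-to-end demand forecasting": (5, 2),
    "internal wiki chatbot": (2, 4),
}
# Highest combined score first, so quick wins surface at the top.
for name, (v, f) in sorted(candidates.items(), key=lambda kv: kv[1][0] * kv[1][1], reverse=True):
    print(f"{name}: {quadrant(v, f)}")
```

The "quick win" quadrant corresponds to the low-risk, high-reward projects worth starting with; "strategic" items are valuable but need more groundwork first.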

Prepare Your Data and Infrastructure for AI

Successful AI integration hinges on quality data preparation. Legacy systems typically store information in outdated formats that AI algorithms cannot readily process. This makes thorough data preparation your critical first step.

Clean and standardize legacy data

Data cleaning involves removing duplicates, correcting errors, and ensuring consistency across datasets. For effective AI implementation:

- De-duplicate and validate data to remove redundancies that could skew AI results

- Normalize formats to create uniform structures across all data sources

- Fill gaps in incomplete records that might otherwise lead to biased outcomes

- Label and categorize data appropriately, especially for supervised learning models
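The four steps above can be combined into a single cleaning pass. This is a minimal sketch over a list of dictionaries, assuming hypothetical `id`, `email`, and `country` fields; production pipelines would more likely use a dataframe library or a dedicated data-quality tool, and gap-filling rules should come from the business rather than from code defaults.

```python
def clean(records: list) -> list:
    """De-duplicate, normalize, fill gaps in, and label legacy records."""
    seen = set()
    cleaned = []
    for raw in records:
        raw_id = raw.get("id")
        rec = {
            # Normalize formats: trimmed ids, lowercase emails, uppercase country codes.
            "id": "" if raw_id is None else str(raw_id).strip(),
            "email": (raw.get("email") or "").strip().lower(),
            # Fill gaps with an explicit placeholder instead of guessing a value.
            "country": (raw.get("country") or "").strip().upper() or "UNKNOWN",
        }
        # Validate and de-duplicate on the normalized id; the first occurrence wins.
        if not rec["id"] or rec["id"] in seen:
            continue
        seen.add(rec["id"])
        # Label each record so supervised models can filter or weight it.
        rec["label"] = "complete" if rec["email"] else "missing_email"
        cleaned.append(rec)
    return cleaned


legacy = [
    {"id": " 7 ", "email": " Ana@Example.COM ", "country": "de"},
    {"id": "7", "email": "duplicate@example.com"},
    {"id": 8, "email": None, "country": None},
    {"email": "no-id@example.com"},
]
for row in clean(legacy):
    print(row)
```

Normalizing before de-duplicating matters here: `" 7 "` and `"7"` only collide once whitespace has been stripped.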

Organizations that prioritize data preparation see significantly higher success rates with their AI implementations. Poor data quality remains one of the top reasons AI projects fail.

Build data pipelines and APIs

Data pipelines automate the flow between legacy systems and AI applications. These pipelines typically handle collection, storage, cleaning, and transformation steps.
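At its simplest, a pipeline is a source, an ordered list of transformation stages, and a sink. The sketch below is a hypothetical illustration in plain Python. `legacy_export` stands in for whatever extract the legacy system provides, and in practice an orchestrator or ETL tool would handle scheduling, retries, and logging.

```python
from typing import Callable, Iterable, List

Stage = Callable[[List[dict]], List[dict]]


def run_pipeline(source: Callable[[], Iterable[dict]],
                 stages: List[Stage],
                 sink: Callable[[List[dict]], None]) -> int:
    """Collect from the source, apply each stage in order, store, and return the row count."""
    batch = list(source())
    for stage in stages:
        batch = stage(batch)
    sink(batch)
    return len(batch)


def legacy_export() -> List[dict]:
    # Hypothetical stand-in for a nightly export; amounts arrive as cent strings.
    return [{"amount_cents": "1250"}, {}, {"amount_cents": "99"}]


def drop_empty(rows: List[dict]) -> List[dict]:
    return [r for r in rows if r]


def to_dollars(rows: List[dict]) -> List[dict]:
    return [{"amount": int(r["amount_cents"]) / 100} for r in rows]


warehouse: List[dict] = []
loaded = run_pipeline(legacy_export, [drop_empty, to_dollars], warehouse.extend)
print(loaded, warehouse)
```

Keeping each stage a small, pure function makes it easy to add or reorder cleaning and transformation steps without touching the legacy source.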

APIs and middleware solutions function as bridges between AI and legacy systems, facilitating data exchange while minimizing system modifications. Keller Williams used this approach by connecting all their systems to power AI applications like Command (their AI-powered CRM) and Kelle (AI personal assistant).

NewGlobe applied a similar strategy by connecting their GenAI system to teacher guide templates and content spreadsheets through APIs for seamless integration. Newzip also built API interfaces that connected their AI system to customer data, enhancing personalization capabilities.
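Many legacy systems expose data as fixed-width records rather than JSON. A thin adapter can translate between the two so that neither side has to change. The record layout below is entirely hypothetical; a real adapter would follow the legacy system's own copybook or file specification.

```python
import json

# Hypothetical fixed-width layout: (field name, start offset, end offset).
LAYOUT = [("customer_id", 0, 6), ("name", 6, 26), ("balance_cents", 26, 36)]


def parse_legacy_record(line: str) -> dict:
    """Translate one fixed-width legacy record into a dictionary."""
    rec = {name: line[start:end].strip() for name, start, end in LAYOUT}
    rec["balance_cents"] = int(rec["balance_cents"] or 0)
    return rec


def to_ai_payload(lines: list) -> str:
    """Serialize parsed records as a JSON body for a downstream AI service."""
    return json.dumps({"records": [parse_legacy_record(raw) for raw in lines]})


record = "000042" + "Jane Doe".ljust(20) + "0000012500"
print(to_ai_payload([record]))
```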

Ensure compliance and security readiness

When preparing infrastructure for AI integration, security cannot be an afterthought. You'll need to implement:

- Access controls limiting who can interact with AI-enhanced functionalities

- Data encryption for information transferred between legacy and AI systems

- Compliance monitoring to ensure all data handling meets regulations like GDPR or HIPAA
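Two of these controls can be enforced in code at the boundary where data leaves the legacy system. The minimal sketch below assumes a hypothetical role-to-permission map and replaces a direct identifier with a keyed HMAC-SHA256 pseudonym before a record is sent to an AI service. Encryption in transit would normally come from TLS on the connection itself, and pseudonymization on its own does not make a system GDPR or HIPAA compliant.

```python
import hashlib
import hmac

# Hypothetical policy: which roles may invoke which AI-enhanced functions.
PERMISSIONS = {
    "claims_analyst": {"score_claim"},
    "auditor": {"score_claim", "view_explanations"},
}


def is_allowed(role: str, action: str) -> bool:
    return action in PERMISSIONS.get(role, set())


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash: the same input always maps to the same pseudonym, so joins still work."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_for_ai(record: dict, role: str, key: bytes) -> dict:
    """Check access, then strip or pseudonymize sensitive fields."""
    if not is_allowed(role, "score_claim"):
        raise PermissionError(f"role {role!r} may not call score_claim")
    safe = dict(record)
    safe["patient_id"] = pseudonymize(record["patient_id"], key)
    safe.pop("ssn", None)  # Drop fields the model does not need at all.
    return safe


key = b"load-this-from-a-secrets-manager"  # Hypothetical; never hard-code real keys.
print(prepare_for_ai({"patient_id": "P-1001", "ssn": "000-00-0000", "amount": 420},
                     "claims_analyst", key))
```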

NewGlobe maintained security protocols throughout its AI integration process, ensuring compatibility with existing software. Preparing your infrastructure ultimately involves balancing innovation with the protection of sensitive information.

Integrate AI Using Modular and Phased Approaches

Implementing AI doesn't mean you need to dismantle your entire infrastructure. A modular, phased approach lets you build AI capabilities around your existing systems rather than inside them. This method minimizes disruption while maximizing benefits.

Use middleware and APIs for communication

Middleware acts as a translator between legacy systems and modern AI capabilities, extending your infrastructure's lifespan without costly overhauls. Research published in Computer Science & Information Technology confirms this approach creates a sustainable path for organizations to use AI while preserving existing investments.

Key integration methods include:

- APIs and RESTful services that enable data exchange between legacy systems and AI models without altering core functions

- Middleware solutions that bridge communication gaps and typically take just 6 to 12 weeks to implement, versus months for full system replacements

- Microservices architecture that provides modular integration, adding AI functionality without system-wide modifications
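The key property of this style of integration is that the legacy code path keeps working even when the AI component does not. One way to express that is a wrapper that calls the unchanged legacy function and then attaches an AI result, falling back to `None` on failure. This is a minimal sketch: `legacy_quote`, `model_ok`, and `model_down` are hypothetical stand-ins for a real legacy routine and a real model endpoint.

```python
from typing import Callable


def with_ai_enrichment(legacy_fn: Callable[[dict], dict],
                       ai_score: Callable[[dict], float]) -> Callable[[dict], dict]:
    """Wrap a legacy function so its result gains an AI score without modifying it."""
    def wrapped(request: dict) -> dict:
        result = dict(legacy_fn(request))  # Core legacy logic runs unchanged.
        try:
            result["ai_score"] = ai_score(request)
        except Exception:
            # An AI outage or timeout must never break the legacy path.
            # A production version would also log the failure.
            result["ai_score"] = None
        return result
    return wrapped


def legacy_quote(request: dict) -> dict:
    return {"premium": request["base"] + 50}


def model_ok(request: dict) -> float:
    return 0.2


def model_down(request: dict) -> float:
    raise TimeoutError("model endpoint unavailable")


print(with_ai_enrichment(legacy_quote, model_ok)({"base": 100}))
print(with_ai_enrichment(legacy_quote, model_down)({"base": 100}))
```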

Keller Williams successfully applied this approach by connecting all their systems through middleware and APIs, powering AI applications like Command (their AI-enhanced CRM) and Kelle (AI personal assistant).

Start with low-risk, high-impact use cases

Focused, domain-specific AI implementations often yield the highest ROI in the shortest timeframe. For example, rather than overhauling an entire insurance claims processing platform, an AI-powered fraud detection model could operate as a separate service while delivering significant cost savings.
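To illustrate the idea of a separate service, the sketch below screens claims independently of the claims platform, which keeps processing exactly as before. A simple z-score rule on claim amounts stands in for a trained fraud model; the threshold, field names, and history are hypothetical, and a real detector would use many more features.

```python
from statistics import mean, stdev


class FraudScreen:
    """Standalone service that flags claims with unusual amounts.

    A z-score rule is used here as a stand-in for a trained fraud model.
    """

    def __init__(self, historical_amounts: list, threshold: float = 3.0):
        self.mu = mean(historical_amounts)
        self.sigma = stdev(historical_amounts)  # Sample standard deviation.
        self.threshold = threshold

    def review(self, claim: dict) -> dict:
        z = (claim["amount"] - self.mu) / self.sigma if self.sigma else 0.0
        return {"claim_id": claim["claim_id"], "z": round(z, 2), "flag": abs(z) >= self.threshold}


screen = FraudScreen([100, 110, 90, 105, 95])
print(screen.review({"claim_id": "C-1", "amount": 200}))
print(screen.review({"claim_id": "C-2", "amount": 105}))
```

Because the screen only reads claims and returns flags, it can run in shadow mode first, with reviewers comparing its flags against their own decisions before anyone acts on them.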

NewGlobe adopted this strategy when integrating GenAI into its content creation process. They connected their AI system to teacher guide templates and content spreadsheets through APIs, transforming content development into a streamlined, intelligent process.

Leverage edge computing where needed

For use cases requiring real-time processing or operation in bandwidth-constrained environments, edge computing brings AI capabilities closer to where the data is generated. It is particularly useful for:

- Processing sensitive data locally to enhance privacy

- Reducing latency for time-critical applications

- Operating in environments with unreliable connectivity

Smart edge devices can collect, process, and analyze high-frequency data locally without constant connection to cloud servers, then integrate insights into legacy systems without overwhelming them with unnecessary data.
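The core of this pattern is reducing data at the edge before it reaches the legacy system. This minimal sketch, with assumed normal-range limits and a hypothetical message shape, turns a window of raw sensor readings into one compact summary that keeps only the out-of-range values.

```python
from statistics import mean


def summarize_window(readings: list, low: float, high: float) -> dict:
    """Reduce a window of high-frequency readings to one compact message.

    Only the summary and any out-of-range values leave the edge device.
    """
    anomalies = [r for r in readings if not (low <= r <= high)]
    return {
        "count": len(readings),
        "mean": round(mean(readings), 3),
        "max": max(readings),
        "anomalies": anomalies,
    }


# Hypothetical temperature readings with an assumed normal range of 15 to 30.
print(summarize_window([20.0, 20.5, 21.0, 35.0], low=15, high=30))
```

Sending one summary per window instead of every raw reading keeps traffic to the legacy system predictable, regardless of the sensor's sampling rate.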

Collaborate with IT and data teams

Successful AI integration demands effective collaboration between AI experts and IT professionals. The two groups often approach problems from different perspectives: AI experts focus on functionality, while IT teams concentrate on system compatibility.

To foster productive partnerships:

- Establish reliable communication channels between teams.

- Create a collaborative environment where AI experts explain technical requirements while IT teams clarify infrastructure constraints.

- Develop a shared roadmap outlining project goals, timelines, and technical milestones.

Newzip exemplified this collaborative approach when developing their AI-powered personalization system, with ongoing support between AI developers and the platform integration team ensuring seamless implementation.

Scale AI Systems and Align with Business Strategy

Once AI integration is underway, establishing robust measurement frameworks becomes critical for scaling and optimization. Successfully scaled AI implementations deliver measurable business impact while continuing to evolve with your organization's needs.

Track KPIs and measure ROI

Effective AI measurement requires a blend of qualitative and quantitative metrics. According to PwC's AI Business Value Report, AI integration can increase profitability by up to 38% by 2030. To demonstrate tangible value:

- Define clear objectives and KPIs before integration, such as reduced operational costs, increased sales, improved response times, or decreased manual errors.

- Calculate the Total Cost of Ownership (TCO), including software licensing, infrastructure investments, consulting fees, training, and ongoing maintenance.

- Measure both direct and indirect benefits through customer surveys, Net Promoter Scores, or employee feedback.
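The TCO and ROI calculations reduce to simple arithmetic once the cost categories are agreed. The sketch below assumes licensing and maintenance recur annually while infrastructure, consulting, and training are one-off, and it uses a basic undiscounted ROI; all figures are hypothetical, and a finance team may prefer NPV or payback period.

```python
def total_cost_of_ownership(one_off: dict, annual: dict, years: int) -> float:
    """One-off costs plus recurring costs over the evaluation period."""
    return sum(one_off.values()) + sum(annual.values()) * years


def simple_roi(annual_benefit: float, tco: float, years: int) -> float:
    """Undiscounted ROI: (total benefit - TCO) / TCO."""
    return (annual_benefit * years - tco) / tco


one_off = {"infrastructure": 50_000, "consulting": 30_000, "training": 20_000}
annual = {"licensing": 10_000, "maintenance": 15_000}
tco = total_cost_of_ownership(one_off, annual, years=3)
print(tco, round(simple_roi(100_000, tco, years=3), 3))
```

With these assumed numbers, a three-year TCO of 175,000 against 300,000 of benefit gives an ROI of about 0.714, or roughly 71%.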

Organizations working with integration partners achieve 42% faster time-to-value and up to 30% higher operational efficiency gains compared to those managing integration internally.

Automate deployment and monitoring

AI systems require continuous optimization to maintain performance. To support this, organizations must:

- Implement automated deployment pipelines to keep AI solutions agile as data evolves.

- Establish regular audits to evaluate integration effectiveness.

- Use AI-powered analytics tools to monitor performance metrics in real time; companies using these tools report 33% higher ROI on AI investments.

Regular model retraining and updates are crucial to maintain accuracy and reliability, especially as data changes.
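Monitoring and retraining can be connected with a simple rule: track accuracy on recently labeled predictions and flag the model when it falls below an agreed floor. The window size and threshold below are assumptions, and in a real deployment the flag would trigger the automated pipeline rather than a print statement.

```python
from collections import deque


class AccuracyMonitor:
    """Track accuracy over a sliding window and signal when retraining is due."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.9):
        self.outcomes = deque(maxlen=window)  # Oldest outcomes drop off automatically.
        self.min_accuracy = min_accuracy

    def record(self, prediction, actual) -> None:
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few early errors do not trigger retraining.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [(1, 1), (1, 1), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy, monitor.needs_retraining())
```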

Foster adoption through training and UX improvements

User adoption significantly impacts AI integration success. To encourage it, organizations should:

- Invest in change management and training to address resistance

- Develop intuitive dashboards tailored to stakeholder needs

- Showcase successful pilots to build enthusiasm and overcome skepticism

- Modernize interfaces for delivering AI insights to different users

Examples in practice

NewGlobe integrated GenAI into content creation, reducing teacher guide creation time from 4 hours to 10 minutes and saving an estimated $835,000 annually.

Newzip achieved a 60% increase in engagement and a 10% increase in conversions through AI hyper-personalization.

ARC Europe cut insurance claim assessment time by 83%, from 30 minutes to just 5 minutes, with a GPT-powered AI agent. The system delivers more consistent evaluations, reduces manual workload, and creates a scalable foundation for future automation across Europe’s largest roadside assistance network.

Conclusion

Integrating AI into legacy systems presents a valuable opportunity rather than an impossible challenge. We've seen throughout this guide how organizations can adopt practical, methodical approaches to modernization without complete system overhauls. Companies like Keller Williams have shown remarkable success by connecting their legacy infrastructure to power AI applications like Command and Kelle, significantly boosting their operational capabilities.

The evaluation of your existing infrastructure forms the foundation for successful integration. Understanding system limitations, data quality, and alignment with business objectives establishes a realistic roadmap. Thorough data preparation is essential: cleaning and standardizing data, and building effective pipelines that connect legacy systems with new AI capabilities.

The modular, phased approach offers the most pragmatic path forward. NewGlobe exemplifies this strategy, having seamlessly integrated GenAI into their content creation process through APIs and compatible interfaces, reducing guide creation time from four hours to just ten minutes. Similarly, Newzip's implementation of AI for hyper-personalization through careful API integration with their existing platform led to a 60% increase in engagement.

Successful AI integration depends on ongoing measurement and optimization. Organizations that establish clear KPIs and track ROI consistently outperform those that implement AI without strategic alignment. The examples of Shine's AI transcription system saving providers two hours daily and Keller Williams' Command platform engaging 170,000 quarterly active users highlight the tangible benefits of thoughtful integration.

As technology evolves, your approach to AI integration must adapt too. Legacy systems present unique challenges, but they also contain valuable data and proven workflows that, when enhanced with AI capabilities, can deliver exceptional business value. The question isn't whether to integrate AI into legacy systems but how to do so effectively, a question this guide has aimed to answer with practical, proven strategies that work.